
Review

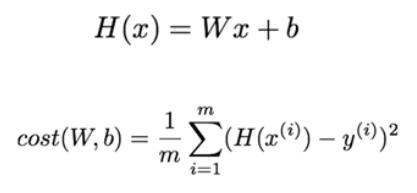

(H(x) = predicted value, y = true value)

Adjust W and b to minimize the cost.
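Here is a plain-Python sketch with no TensorFlow. The function name `cost` is only for illustration. The cost for a given W and b is the mean of the squared differences between the hypothesis H(x) = Wx + b and the true y:

```python
def cost(W, b, xs, ys):
    """Mean squared error between the hypothesis H(x) = W*x + b and the true y."""
    return sum((W * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


x_train = [1, 2, 3]
y_train = [1, 2, 3]

print(cost(1.0, 0.0, x_train, y_train))  # perfect fit: 0.0
print(cost(2.0, 0.0, x_train, y_train))  # (1 + 4 + 9) / 3
```

Training means searching for the W and b that make this number as small as possible.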

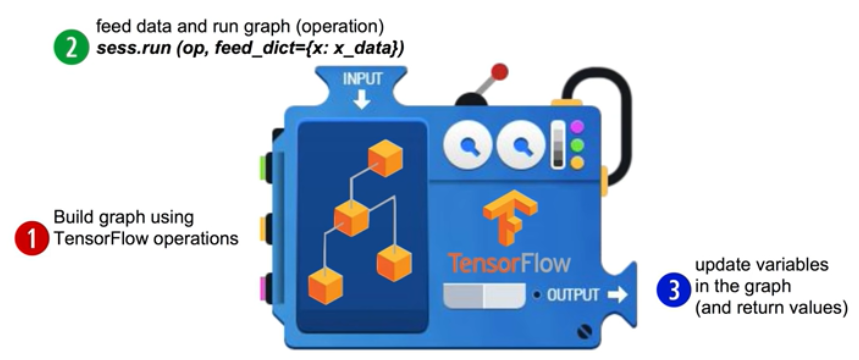

TensorFlow Mechanics

1. Build the graph using TF operations

2. Run the graph with session.run

3. Update the variables in the graph and get results
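The toy sketch below illustrates the build-then-run split in plain Python. The `Node` class and the `constant`, `add`, `mul` and `run` functions are hypothetical. They only mimic the idea and are not how TensorFlow's graph or `Session` is implemented. Building the nodes computes nothing. A value appears only when `run` evaluates a node. Variables and updates (step 3) are left out for brevity.

```python
class Node:
    """A deferred operation: stores how to compute a value, not the value itself."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs


def constant(value):
    return Node(lambda: value)


def add(a, b):
    return Node(lambda x, y: x + y, a, b)


def mul(a, b):
    return Node(lambda x, y: x * y, a, b)


def run(node):
    """Evaluate a node by first evaluating its inputs (similar in spirit to session.run)."""
    return node.fn(*(run(n) for n in node.inputs))


# 1. Build the graph: nothing is computed yet
x = constant(3.0)
W = constant(2.0)
b = constant(0.5)
hypothesis = add(mul(x, W), b)

# 2. Run the graph to get a value
print(run(hypothesis))  # 2.0 * 3.0 + 0.5 = 6.5
```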

1. Build graph using TF operations

```python
import tensorflow as tf  # TensorFlow 1.x API

# X and Y data
x_train = [1, 2, 3]
y_train = [1, 2, 3]

# Try to find values for W and b to compute y_data = x_data * W + b
# We know that W should be 1 and b should be 0
# But let TensorFlow figure it out
W = tf.Variable(tf.random_normal([1]), name="weight")
b = tf.Variable(tf.random_normal([1]), name="bias")

# Our hypothesis XW+b
hypothesis = x_train * W + b

# cost/loss function
cost = tf.reduce_mean(tf.square(hypothesis - y_train))

# optimizer
train = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)
```
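`GradientDescentOptimizer(learning_rate=0.01).minimize(cost)` builds an op that moves W and b a small step against the gradient of the cost. For this mean squared error the gradients are ∂cost/∂W = 2·mean((Wx + b − y)·x) and ∂cost/∂b = 2·mean(Wx + b − y). The plain-Python sketch below applies one such step by hand. `gradient_step` is a hypothetical helper, not a TensorFlow function.

```python
def gradient_step(W, b, xs, ys, learning_rate=0.01):
    """One gradient descent update for cost = mean((W*x + b - y)^2)."""
    n = len(xs)
    errors = [W * x + b - y for x, y in zip(xs, ys)]
    # Partial derivatives of the mean squared error
    dW = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    db = 2 * sum(errors) / n
    # Move against the gradient, scaled by the learning rate
    return W - learning_rate * dW, b - learning_rate * db


x_train = [1, 2, 3]
y_train = [1, 2, 3]

print(gradient_step(1.0, 0.0, x_train, y_train))  # already optimal: (1.0, 0.0)
print(gradient_step(2.0, 0.0, x_train, y_train))  # W and b both decrease
```

At the optimum the errors are all zero, so the gradients vanish and the step leaves W and b unchanged.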

2 and 3. Run / update graph and get results

```python
# Continues from the graph built above
# Launch the graph in a session
sess = tf.Session()

# Initialize global variables in the graph
sess.run(tf.global_variables_initializer())

# Fit the line
for step in range(2001):
    sess.run(train)
    if step % 20 == 0:
        print(step, sess.run(cost), sess.run(W), sess.run(b))
```
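For comparison, here is the same training loop written in plain Python without TensorFlow. It assumes hypothetical fixed starting values, W = 2.0 and b = -1.0, instead of random ones. It keeps the learning rate (0.01), the 2001 steps and the print every 20 steps. Because the starting point differs, the printed numbers will not match the TensorFlow log. They should show the same trend toward W ≈ 1 and b ≈ 0.

```python
x_train = [1, 2, 3]
y_train = [1, 2, 3]
learning_rate = 0.01

# Hypothetical fixed starting point instead of tf.random_normal
W, b = 2.0, -1.0


def compute_cost(w, c):
    """Mean squared error of the hypothesis w*x + c on the training data."""
    return sum((w * x + c - y) ** 2 for x, y in zip(x_train, y_train)) / len(x_train)


for step in range(2001):
    # Gradients of the mean squared error with respect to W and b
    errors = [W * x + b - y for x, y in zip(x_train, y_train)]
    dW = 2 * sum(e * x for e, x in zip(errors, x_train)) / len(x_train)
    db = 2 * sum(errors) / len(x_train)
    # One plain gradient descent update
    W -= learning_rate * dW
    b -= learning_rate * db
    if step % 20 == 0:
        print(step, compute_cost(W, b), W, b)
```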

Result

```text
# Learns best fit W:[ 1.], b:[ 0.]
0 2.82329 [ 2.12867713] [-0.85235667]
20 0.190351 [ 1.53392804] [-1.05059612]
40 0.151357 [ 1.45725465] [-1.02391243]
...
1960 1.46397e-05 [ 1.004444] [-0.01010205]
1980 1.32962e-05 [ 1.00423515] [-0.00962736]
2000 1.20761e-05 [ 1.00403607] [-0.00917497]
```

Full code with Placeholders

```python
# Lab 2 Linear Regression
import tensorflow as tf

tf.set_random_seed(777)  # for reproducibility

# Try to find values for W and b to compute Y = W * X + b
W = tf.Variable(tf.random_normal([1]), name="weight")
b = tf.Variable(tf.random_normal([1]), name="bias")

# placeholders for a tensor that will be always fed using feed_dict
# See http://stackoverflow.com/questions/36693740/
X = tf.placeholder(tf.float32, shape=[None])
Y = tf.placeholder(tf.float32, shape=[None])

# Our hypothesis is X * W + b
hypothesis = X * W + b

# cost/loss function
cost = tf.reduce_mean(tf.square(hypothesis - Y))

# optimizer
train = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)

# Launch the graph in a session.
with tf.Session() as sess:
    # Initializes global variables in the graph.
    sess.run(tf.global_variables_initializer())

    # Fit the line
    for step in range(2001):
        _, cost_val, W_val, b_val = sess.run(
            [train, cost, W, b], feed_dict={X: [1, 2, 3], Y: [1, 2, 3]}
        )
        if step % 20 == 0:
            print(step, cost_val, W_val, b_val)
```
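With placeholders, the graph is built once and the data is supplied on every `sess.run` call through `feed_dict`. As a rough plain-Python analogue, a closure can hold W and b as state and receive the data as a dictionary each time it runs. The `build_linear_regression` helper, the `"X"`/`"Y"` keys and the starting values of 0.0 are all hypothetical. The second dataset (y = 2x + 1) is made up purely to show the same model structure fed with different data.

```python
def build_linear_regression(learning_rate=0.01):
    """Return a 'train' function whose data is supplied on every call."""
    state = {"W": 0.0, "b": 0.0}  # hypothetical starting values

    def run_train(feed):
        xs, ys = feed["X"], feed["Y"]
        n = len(xs)
        errors = [state["W"] * x + state["b"] - y for x, y in zip(xs, ys)]
        cost = sum(e * e for e in errors) / n
        # Gradient descent update on the stored variables
        state["W"] -= learning_rate * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        state["b"] -= learning_rate * 2 * sum(errors) / n
        # Return the cost before this update and the updated W and b
        return cost, state["W"], state["b"]

    return run_train


# Same model structure, two different feeds
train_a = build_linear_regression()
for step in range(2001):
    cost_val, W_val, b_val = train_a({"X": [1, 2, 3], "Y": [1, 2, 3]})
print(W_val, b_val)  # close to 1 and 0

train_b = build_linear_regression()
for step in range(2001):
    cost_val2, W_val2, b_val2 = train_b({"X": [1, 2, 3, 4, 5], "Y": [3, 5, 7, 9, 11]})
print(W_val2, b_val2)  # close to 2 and 1
```

Keeping the data out of the model definition is what lets the same training code be reused for any dataset of the right shape.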
